fluentd/log collecting server

This article explains how to set up fluentd on the log collecting server. This fluentd instance receives the logs sent by fluentd on the gateway side. (How to set up fluentd on a gateway is described in the article Armadillo-Box WS1/fluentd.)

The logs that fluentd receives are stored in elasticsearch and MongoDB. The logs in elasticsearch are used by Kibana, which lets you monitor the results from a web browser.

OS

As of November 2015, Ubuntu 14.04 LTS Server, the newest LTS (long-term support) server release, is used for the log collecting server.

Configure the host name, the fixed IP address, and the firewall rules used as a security measure according to your network environment.

In this article, the log collecting server is configured as listed below.

Host Name: aggregator

User Name: beat

MongoDB

Please install MongoDB from the MongoDB repository, not from the Ubuntu repository. To install it, follow the instructions in Install MongoDB on Ubuntu.

Registering the public key used to authenticate the repository

beat@aggregator:~$ sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10

Executing: gpg --ignore-time-conflict --no-options --no-default-keyring --homedir /tmp/tmp.0CJX1sXM4s --no-auto-check-trustdb \

--trust-model always --keyring /etc/apt/trusted.gpg --primary-keyring /etc/apt/trusted.gpg --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10

gpg: requesting key 7F0CEB10 from hkp server keyserver.ubuntu.com

gpg: key 7F0CEB10: public key "Richard Kreuter <richard@10gen.com>" imported

gpg: Total number processed: 1

gpg: imported: 1 (RSA: 1)

Adding the package list of MongoDB

beat@aggregator:~$ echo "deb http://repo.mongodb.org/apt/ubuntu "$(lsb_release -sc)"/mongodb-org/3.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-3.0.list

Obtaining the package list of the newly added repository

beat@aggregator:~$ sudo apt-get update

Installing MongoDB

From the newly added MongoDB repository, install the MongoDB package (mongodb-org).

beat@aggregator:~$ sudo apt-get install mongodb-org

Dealing with the mongo shell warning

Once the deb package is installed, MongoDB is basically ready to use. However, when you open the mongo shell with the mongo command, a warning about Transparent Huge Pages appears. To deal with this warning, follow the instructions in the official documentation.

Create the file disable-transparent-hugepages in the /etc/init.d/ directory.

The content of the file is listed below.

#!/bin/sh

### BEGIN INIT INFO

# Provides: disable-transparent-hugepages

# Required-Start: $local_fs

# Required-Stop:

# X-Start-Before: mongod mongodb-mms-automation-agent

# Default-Start: 2 3 4 5

# Default-Stop: 0 1 6

# Short-Description: Disable Linux transparent huge pages

# Description: Disable Linux transparent huge pages, to improve

# database performance.

### END INIT INFO

case $1 in

start)

if [ -d /sys/kernel/mm/transparent_hugepage ]; then

thp_path=/sys/kernel/mm/transparent_hugepage

elif [ -d /sys/kernel/mm/redhat_transparent_hugepage ]; then

thp_path=/sys/kernel/mm/redhat_transparent_hugepage

else

return 0

fi

echo 'never' > ${thp_path}/enabled

echo 'never' > ${thp_path}/defrag

unset thp_path

;;

esac

Once the file is created, make it executable and register it so that it runs when the system boots.

beat@aggregator:~$ sudo chmod 755 /etc/init.d/disable-transparent-hugepages

beat@aggregator:~$ sudo update-rc.d disable-transparent-hugepages defaults
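
Once the init script has run (at boot, or when invoked by hand with the start argument), its effect can also be read directly from sysfs. The following is a minimal sketch, assuming the standard /sys/kernel/mm/transparent_hugepage path used in the script above; thp_selected is a hypothetical helper name introduced only for this illustration.

```shell
#!/bin/sh
# thp_selected is a hypothetical helper, not part of MongoDB or Ubuntu.
# The kernel reports the setting as e.g. "always madvise [never]",
# with the active value enclosed in square brackets.
thp_selected() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([a-z]*\)].*/\1/p'
}

thp_file=/sys/kernel/mm/transparent_hugepage/enabled
if [ -r "$thp_file" ]; then
    echo "THP enabled setting: $(thp_selected "$(cat "$thp_file")")"
else
    echo "THP interface not found at $thp_file"
fi
```

If the script took effect, the reported setting should be never.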

After rebooting, please make sure that no warning shows up when you start the mongo shell.

beat@aggregator:~$ mongo

MongoDB shell version: 3.0.7

connecting to: test

>

elasticsearch

To visualize the collected logs, install elasticsearch, a full-text search server that works with Kibana. elasticsearch stores the logs sent from Armadillo-Box WS1 in logstash format.

Installing java

Java is required to run elasticsearch, so install it first. As of November 2015, the newest version is Java 8, which is the version installed here.

beat@aggregator:~$ sudo add-apt-repository ppa:webupd8team/java

beat@aggregator:~$ sudo apt-get update

beat@aggregator:~$ sudo apt-get install oracle-java8-installer

Installing elasticsearch

Following the official documentation, add the repository and install elasticsearch from it.

beat@aggregator:~$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

beat@aggregator:~$ echo "deb http://packages.elastic.co/elasticsearch/1.7/debian stable main" | sudo tee -a /etc/apt/sources.list.d/elasticsearch-1.7.list

beat@aggregator:~$ sudo apt-get update

beat@aggregator:~$ sudo apt-get install elasticsearch

Once the installation is complete, configure elasticsearch to start as a service when the server boots.

beat@aggregator:~$ sudo update-rc.d elasticsearch defaults 95 10

Access port 9200 to check that elasticsearch works correctly.

If you receive a reply like the one shown below, elasticsearch is working.

beat@aggregator:~$ curl -X GET http://localhost:9200/

{

"status" : 200,

"name" : "Red Wolf",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "1.7.3",

"build_hash" : "05d4530971ef0ea46d0f4fa6ee64dbc8df659682",

"build_timestamp" : "2015-10-15T09:14:17Z",

"build_snapshot" : false,

"lucene_version" : "4.10.4"

},

"tagline" : "You Know, for Search"

}
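
For a scripted check, the version number can be pulled out of that reply. This is only a sketch: es_version is a hypothetical helper, and it uses sed rather than a JSON parser on the assumption that none is installed on the server.

```shell
#!/bin/sh
# es_version is a hypothetical helper: it reads the JSON reply on stdin
# and prints the value of the "number" field.
es_version() {
    sed -n 's/.*"number" *: *"\([^"]*\)".*/\1/p'
}

# Demonstration with the line taken from the reply shown above.
printf '%s\n' '"number" : "1.7.3",' | es_version

# On the server itself, the live reply could be piped in instead:
#   curl -s http://localhost:9200/ | es_version
```

An empty result would suggest that elasticsearch did not answer or that the reply has a different shape.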

Adjusting elasticsearch

Referring to Configuration and Running as a Service on Linux in the official documentation, adjust the maximum number of files that can be opened and the maximum amount of memory that is allocated to elasticsearch.

Please add the two lines shown below to /etc/security/limits.conf.

elasticsearch - nofile 65535

elasticsearch - memlock unlimited

Please add the single line shown below to /etc/elasticsearch/elasticsearch.yml. It prevents the memory allocated to elasticsearch from being swapped out.

bootstrap.mlockall: true

Please add the three lines listed below to /etc/default/elasticsearch. They correspond to the two previous additions.

ES_HEAP_SIZE=1g # about half of the physical memory

MAX_OPEN_FILES=65535

MAX_LOCKED_MEMORY=unlimited
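
The heap size is meant to be roughly half of the physical memory. As a rough sketch, that value could be derived from /proc/meminfo as follows; half_heap_mb is a hypothetical helper, and the result is expressed in megabytes (for example 1024m rather than 1g).

```shell
#!/bin/sh
# half_heap_mb is a hypothetical helper: given MemTotal in kB,
# it prints half of that amount in MB.
half_heap_mb() {
    echo $(( $1 / 1024 / 2 ))
}

if [ -r /proc/meminfo ]; then
    total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    if [ -n "$total_kb" ]; then
        echo "ES_HEAP_SIZE=$(half_heap_mb "$total_kb")m"
    fi
fi
```

The printed line is only a suggestion; round it to a convenient value before using it as ES_HEAP_SIZE.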

Apache

Install Apache2 from the Ubuntu repository.

beat@aggregator:~$ sudo apt-get install apache2

Its configuration is left at the default. The document root and /var/www remain at their initial settings.

Please apply any security measures you need.

Kibana

Installing Kibana3

Download Kibana3 and place it in the document root of Apache.

beat@aggregator:~$ wget https://download.elastic.co/kibana/kibana/kibana-3.1.2.tar.gz

beat@aggregator:~$ tar xvf kibana-3.1.2.tar.gz

beat@aggregator:~$ mv kibana-3.1.2 kibana3

beat@aggregator:~$ sudo mv kibana3 /var/www/html/

Adjusting the additional configuration

To let kibana3 read the logstash logs stored in elasticsearch, please add the two lines listed below to the Security section of /etc/elasticsearch/elasticsearch.yml.

http.cors.allow-origin: "/.*/"

http.cors.enabled: true

After adding these lines, please restart elasticsearch to apply the new configuration.

beat@aggregator:~$ sudo /etc/init.d/elasticsearch restart

[sudo] password for beat:

* Stopping Elasticsearch Server [ OK ]

* Starting Elasticsearch Server

Confirming the operation

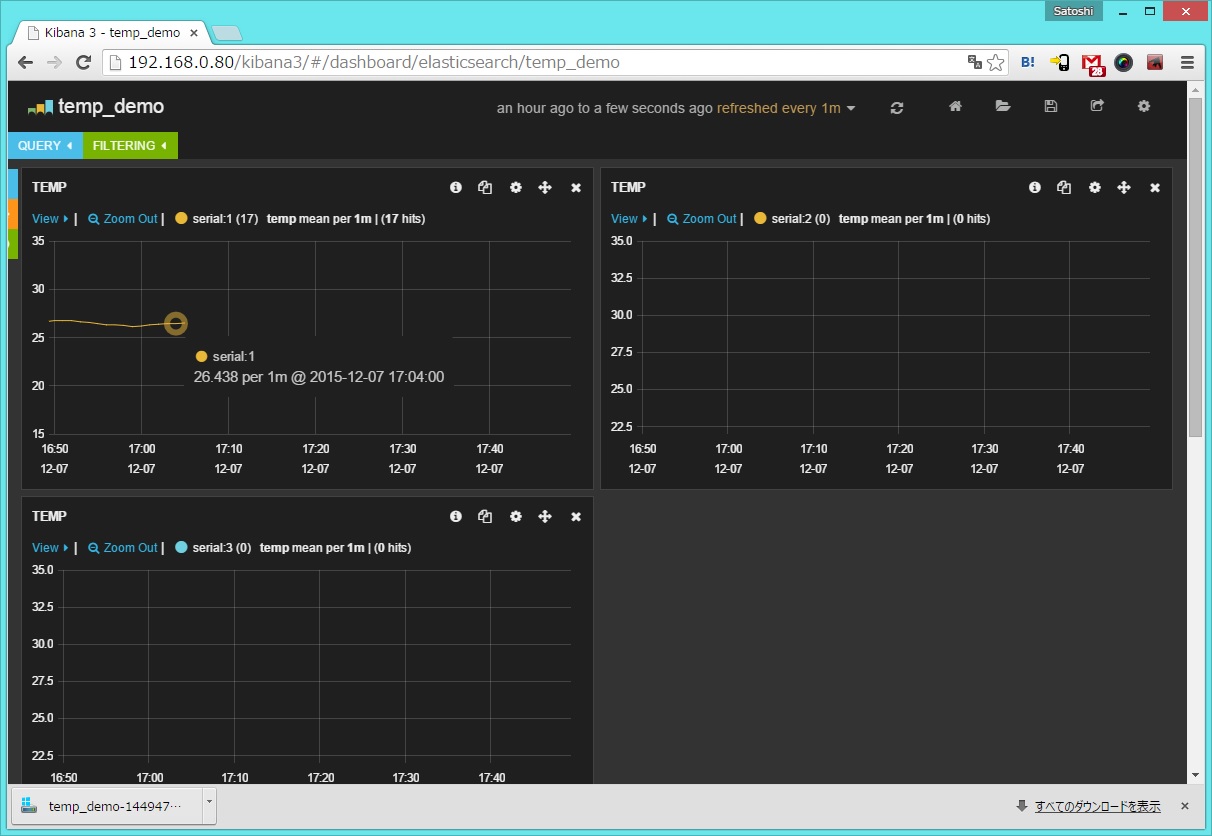

Open a web browser and go to the Kibana3 page at http://{IP Address of the log collecting server}/kibana3/. When kibana3 and elasticsearch work correctly, the kibana3 dashboard appears in the browser.

fluentd

Increasing ulimit

Before installing fluentd, please increase ulimit by following the instructions listed at Before installing.

Please add the four lines listed below to the end of the config file /etc/security/limits.conf.

root soft nofile 65536

root hard nofile 65536

* soft nofile 65536

* hard nofile 65536

After rebooting, please check that the change has taken effect.

beat@aggregator:~$ ulimit -n

65536
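
The same check can be scripted, for instance at the start of a provisioning script. This is a minimal sketch; nofile_ok is a hypothetical helper, and 65536 is the value set in limits.conf above.

```shell
#!/bin/sh
# nofile_ok is a hypothetical helper: it succeeds when the limit in $1
# is "unlimited" or at least the required value in $2.
nofile_ok() {
    [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]
}

current=$(ulimit -n)
if nofile_ok "$current" 65536; then
    echo "nofile limit is sufficient: $current"
else
    echo "nofile limit is too low: $current (want 65536 or more)"
fi
```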

Installing fluentd

To install fluentd, please follow the instructions listed at Installing Fluentd Using Ruby Gem.

(gem is the package management tool for Ruby.)

First, install the packages required for a gem-based installation.

beat@aggregator:~$ sudo apt-get install build-essential

beat@aggregator:~$ sudo apt-get install ruby ruby-dev

Then, install fluentd with gem.

beat@aggregator:~$ sudo gem install fluentd --no-ri --no-rdoc

Fetching: msgpack-0.5.12.gem (100%)

==Skipping==

Fetching: string-scrub-0.0.5.gem (100%)

Building native extensions. This could take a while...

Fetching: fluentd-0.12.15.gem (100%)

Successfully installed msgpack-0.5.12

Successfully installed json-1.8.3

Successfully installed yajl-ruby-1.2.1

Successfully installed cool.io-1.3.1

Successfully installed http_parser.rb-0.6.0

Successfully installed sigdump-0.2.3

Successfully installed thread_safe-0.3.5

Successfully installed tzinfo-1.2.2

Successfully installed tzinfo-data-1.2015.5

Successfully installed string-scrub-0.0.5

Successfully installed fluentd-0.12.15

11 gems installed

When fluentd is installed, the gems it depends on are installed at the same time.

fluentd elasticsearch plug-in

Install the plug-in that transfers logs from fluentd to elasticsearch and saves them in logstash format. Please install the required library with apt-get first, then install the plug-in with gem.

beat@aggregator:~$ sudo apt-get install libcurl4-openssl-dev

beat@aggregator:~$ sudo gem install fluent-plugin-elasticsearch

Fetching: excon-0.45.4.gem (100%)

~~ Skipping ~~

Fetching: fluent-plugin-elasticsearch-1.0.0.gem (100%)

Successfully installed excon-0.45.4

Successfully installed multi_json-1.11.2

Successfully installed multipart-post-2.0.0

Successfully installed faraday-0.9.1

Successfully installed elasticsearch-transport-1.0.12

Successfully installed elasticsearch-api-1.0.12

Successfully installed elasticsearch-1.0.12

Successfully installed fluent-plugin-elasticsearch-1.0.0

8 gems installed

fluentd mongo plug-in

Install the plug-in that sends logs from fluentd to MongoDB and saves them there.

beat@aggregator:~$ sudo gem install fluent-plugin-mongo

Fetching: bson-1.12.3.gem (100%)

Fetching: mongo-1.12.3.gem (100%)

Fetching: fluent-plugin-mongo-0.7.10.gem (100%)

Successfully installed bson-1.12.3

Successfully installed mongo-1.12.3

Successfully installed fluent-plugin-mongo-0.7.10

3 gems installed

Another fluentd plug-in

To keep the fluentd configuration file simple, please install fluent-plugin-forest.

https://rubygems.org/gems/fluent-plugin-forest

beat@aggregator:~$ sudo gem install fluent-plugin-forest

Fetching: fluent-plugin-forest-0.3.0.gem (100%)

Successfully installed fluent-plugin-forest-0.3.0

1 gem installed

fluent.conf

To receive the log data sent by the gateway-side fluentd (configured in the article Armadillo-Box WS1/fluentd), the configuration file for the server-side fluentd, fluent.conf, is written as shown below.

<source>

@type forward

@id forward_input

</source>

<match syslog.**>

@type forest

subtype copy

<template>

<store>

type elasticsearch

logstash_format true

host localhost

port 9200

index_name fluentd

type_name syslog

flush_interval 10s

buffer_chunk_limit 2048k

buffer_queue_limit 5

buffer_path /data/tmp/es_syslog/${hostname}.${tag_parts[1]}.${tag_parts[2]}.${tag_parts[3]}

buffer_type file

</store>

<store>

type mongo

host localhost

port 27017

database fluentd

collection adv

capped

capped_size 4096m

flush_interval 10s

buffer_chunk_limit 8192k

buffer_queue_limit 512

buffer_path /data/tmp/mongo_syslog/${hostname}.${tag_parts[1]}.${tag_parts[2]}.${tag_parts[3]}

buffer_type file

</store>

<store>

type file

path /data/tmp/syslog/${hostname}.${tag_parts[1]}.${tag_parts[2]}.${tag_parts[3]}.log

buffer_path /data/tmp/syslog/${hostname}.${tag_parts[1]}.${tag_parts[2]}.${tag_parts[3]}

flush_interval 10s

buffer_chunk_limit 8192k

buffer_queue_limit 512

buffer_type file

</store>

</template>

</match>

Because buffer_type is set to file, the directories that hold the buffer files must be created in advance.

In this example, three directories, for elasticsearch, mongodb, and file, must be created under /data/tmp/ in advance.
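
The directory names follow from the buffer_path and path settings in the configuration: es_syslog, mongo_syslog, and syslog. The sketch below creates them and also shows roughly how a placeholder path expands. Both helper names are hypothetical, the sample tag is made up for illustration, and the expansion assumes that fluent-plugin-forest counts tag parts from 0 and fills ${hostname} with the host name of the server.

```shell
#!/bin/sh
# Both helpers are hypothetical and exist only for this illustration.

# Create the three buffer/output directories under the base directory $1
# (/data/tmp in the configuration above).
make_buffer_dirs() {
    for name in es_syslog mongo_syslog syslog; do
        mkdir -p "$1/$name" || return 1
    done
}

# Imitate, outside fluentd, how ${hostname} and ${tag_parts[1]} to
# ${tag_parts[3]} are filled in.
# $1 = directory, $2 = host name, $3 = dot-separated tag
expand_forest_path() {
    parts=$(printf '%s' "$3" | cut -d. -f2-4)
    printf '%s/%s.%s\n' "$1" "$2" "$parts"
}

# The tag below is made up; real tags come from the gateway configuration.
expand_forest_path /data/tmp/es_syslog aggregator syslog.ws1.local0.info

# On the server, as root:
#   make_buffer_dirs /data/tmp
```

Since fluentd is started as root in this article, directories created by root are sufficient.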

Checking its operation

Start fluentd with the log option, and check whether it can handle the four tasks listed below.

- Receive logs from fluentd at Armadillo-Box WS1.

- Send and save the logs into Elasticsearch.

- Save the logs in MongoDB.

- Output to the file.

To check these tasks, please run the commands below.

beat@aggregator:~$ sudo -s

root@aggregator:~# fluentd -c /etc/fluent/fluent.conf -o /var/log/fluent.log &

root@aggregator:~# tail -f /var/log/fluent.log
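
To confirm the Elasticsearch task from the command line, it helps to know which index to look at. With logstash_format set to true, the plug-in writes to daily indices named logstash-YYYY.MM.DD. The sketch below builds that name; logstash_index is a hypothetical helper, and using the UTC date is an assumption about the plug-in's default behavior.

```shell
#!/bin/sh
# logstash_index is a hypothetical helper: it turns a date written as
# YYYY-MM-DD into the index name logstash-YYYY.MM.DD.
logstash_index() {
    printf 'logstash-%s\n' "$(printf '%s' "$1" | sed 's/-/./g')"
}

today=$(date -u +%Y-%m-%d)
echo "expected index: $(logstash_index "$today")"

# With elasticsearch running, the document count could then be queried:
#   curl "http://localhost:9200/$(logstash_index "$today")/_count?pretty"
```

A count that grows over time suggests that logs are arriving from the gateway.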

Once you have confirmed that fluentd operates correctly, please open kibana3.

The address of kibana3 is http://{IP address of collecting server}/kibana3/.

As log data are collected, the temperature graph on the kibana3 dashboard moves. The dashboard displays temperature fluctuations between 15 and 35 degrees Celsius.

Once you have checked that the whole system works properly, the use of setup-fluentd-initscript.sh is recommended. It lets fluentd start automatically when the log collecting server boots. setup-fluentd-initscript.sh can be downloaded from the URL below.

https://gist.github.com/Leechael/3671811

Revision History

- 2015-12-08 This article was first released.
